Despite gloomy economy, signs good for billion-dollar US telescope

DOI: 10.1063/PT.3.1710

The hundreds of scientists and engineers involved in the Large Synoptic Survey Telescope (LSST) breathed a collective sigh of relief on 18 July, when the National Science Board gave the project its blessing. The LSST was the top-ranked large ground-based project in the 2010 astronomy and astrophysics decadal survey. And last year, says LSST director Tony Tyson, an independent design review “found no faults with the project. [The reviewers] said we were ready to cut metal.”

“We can call this the end of the beginning,” says Nigel Sharp, who oversees the project at NSF. The National Science Board’s approval means that NSF can ask Congress for money for the LSST. NSF is funding the telescope, site infrastructure, and data system, and the Department of Energy is building the camera. Of the total estimated $665 million construction tab, 70% is from NSF, 24% is from DOE, and 6% is from private donors. NSF’s fiscal year 2014 budget request to Congress won’t be public until next February, but if funding for the LSST is included—and then appropriated—construction would begin that year, and operation could commence in 2021.

The 8.4-m LSST will image large swaths of sky with unrivaled depth and detail as it scans the visible sky every few days from its site in Cerro Pachón, Chile. Over its 10-year lifetime, the LSST will generate more than 100 petabytes of data on 20 billion objects. The four broad science thrusts of the telescope are cosmology, the structure of the Milky Way, the structure of our solar system, and the variable universe. Two big goals are studying dark energy and cataloging near-Earth objects. “One thing about massive amounts of data,” says Sharp, “is that you get enough of rare things that you get a sample you can study. This . . . opens up a new parameter space by viewing objects over time. History shows that any time you open up parameter space you find things you weren’t expecting.”

Technical advances

The wide, deep, fast sky survey is possible thanks to the confluence of technical advances in three areas: microelectronics, computation, and large aspherical optics fabrication. Chips can squeeze in more transistors, and silicon CCD cameras can pack more pixels. The LSST camera gives an “unprecedented wide and crisp image,” says Tyson. The LSST will image the sky in six wavelength bands from UV to near-IR (0.3–1.1 µm), taking pairs of 15-second exposures and reading out the camera’s 3.2 billion pixels in under 2 seconds. The 2700-kg camera is 1.65 m in diameter and 3 m long to accommodate the optics, shutter, filters, electronics, and focal-plane array of 189 CCDs.
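The 3.2-billion-pixel figure and the 2-second readout can be checked with a little arithmetic. The sketch below assumes, as an illustration not stated in this article, that each of the 189 CCDs is a 4096 × 4096 array and that each raw pixel is stored as a 16-bit (2-byte) value. Under those assumptions it estimates the raw size of one exposure and the minimum data rate implied by the readout time.

```python
# Assumptions (not from the article): 4096 x 4096 pixels per CCD, 2 bytes per pixel.
N_CCDS = 189              # focal-plane CCDs (from the article)
PIXELS_PER_SIDE = 4096    # assumed CCD format
BYTES_PER_PIXEL = 2       # assumed 16-bit raw pixel values
READOUT_SECONDS = 2.0     # article: readout "in under 2 seconds"


def camera_pixels(n_ccds: int, side: int) -> int:
    """Total number of pixels across the focal plane."""
    return n_ccds * side * side


def exposure_bytes(pixels: int, bytes_per_pixel: int) -> int:
    """Raw size of one full-frame exposure, before any compression."""
    return pixels * bytes_per_pixel


pixels = camera_pixels(N_CCDS, PIXELS_PER_SIDE)
raw = exposure_bytes(pixels, BYTES_PER_PIXEL)
# Reading out in under 2 s means the sustained rate must exceed raw / 2 s.
min_rate = raw / READOUT_SECONDS

print(f"pixels: {pixels / 1e9:.2f} billion")
print(f"raw exposure: {raw / 1e9:.2f} GB")
print(f"readout rate above {min_rate / 1e9:.2f} GB/s")
```

Under these assumptions the pixel count comes to about 3.17 billion, consistent with the quoted 3.2 billion. The estimate ignores overscan, any non-science sensors, and compression, so it is only a rough guide to the real data rate.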

The LSST will broadcast alerts on objects whose brightness has changed since they were last imaged. The goal is to broadcast within 60 seconds of collecting images, so that other telescopes can follow up on interesting objects. “This will be things that go bump in the night,” says LSST data-management project scientist Mario Juric. “Things that explode, and we don’t know what they are.” With an estimated 2 million alerts each night, people will be able to program “event brokers” to specify what characteristics they want to be alerted about. “We asked astronomers if they wanted us to filter the data,” Juric says. “They said no. Interesting depends on your point of view.”
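Two small calculations make the alert stream more concrete. First, 2 million alerts a night is a sustained rate of tens of alerts per second; the sketch assumes a 10-hour observing night, an illustrative figure not given in the article. Second, an event broker is essentially a user-programmed filter over that stream. The `Alert` fields, the `broker` function, and the `brightened_by_one_mag` criterion below are hypothetical placeholders, not the actual LSST alert format or any real broker's interface.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator

ALERTS_PER_NIGHT = 2_000_000   # estimate quoted in the article
NIGHT_HOURS = 10.0             # assumed length of an observing night


def mean_alert_rate(alerts: int, hours: float) -> float:
    """Average number of alerts per second over one night."""
    return alerts / (hours * 3600)


@dataclass
class Alert:
    # Hypothetical fields; not the actual LSST alert format.
    object_id: int
    delta_mag: float  # magnitude change since last imaged (negative = got brighter)
    band: str         # illustrative filter label


def broker(stream: Iterable[Alert], wants: Callable[[Alert], bool]) -> Iterator[Alert]:
    """Forward only the alerts that match a subscriber's criterion."""
    return (alert for alert in stream if wants(alert))


def brightened_by_one_mag(alert: Alert) -> bool:
    """Example criterion: brightened by at least 1 magnitude in any band."""
    return alert.delta_mag <= -1.0


stream = [Alert(1, -1.5, "g"), Alert(2, 0.3, "r"), Alert(3, -0.4, "i")]
picked = [a.object_id for a in broker(stream, brightened_by_one_mag)]
print(picked)
print(f"{mean_alert_rate(ALERTS_PER_NIGHT, NIGHT_HOURS):.1f} alerts per second on average")
```

Using a generator keeps the filter lazy: each subscriber's criterion is applied to alerts as they arrive rather than after a whole night has been collected, which matters when the goal is to get alerts out within 60 seconds.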

Every year the LSST team will create a comprehensive catalog of data going back to the start of the survey. Measured characteristics will include such things as an object’s color, shape, brightness as a function of time, and relationship to other objects. The alerts will be key for transients, says Juric. “For the other three science goals, the deep catalogs will be more useful.”

Depending on how you count, says Juric, “in one to two nights, we will produce the same amount of data that the Sloan Digital Sky Survey [SDSS] collected in 10 years. The difference in scale is huge. And the analysis will have to be automated.” The final image archive will contain roughly 75 petabytes of data, and the final catalogs of processed data will be nearly 50 petabytes. “We’ll need hundreds of teraflops. By 2020 we will comfortably be able to do that,” Juric says.

To access the vast quantities of data, scientists will submit queries to LSST machines at the National Center for Supercomputing Applications in Illinois. Among the many queries that LSST scientists have been testing on simulated data are “find quasars,” “extract the light curve” for a given object, “find all galaxies brighter than a given magnitude,” and “how many objects cross the orbits of both Jupiter and Saturn?” Such questions will be posed in SQL (Structured Query Language). “Experience from the SDSS shows that more than 90% of interesting questions can be answered in this way,” says Juric. The LSST will allocate about 10% of computer time for more complex questions that don’t fit the query approach.
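To give a flavor of the query approach, the sketch below poses two of the example questions, “find all galaxies brighter than a given magnitude” and “extract the light curve,” in SQL against a tiny in-memory SQLite database. The table names, columns, and rows are invented for illustration; they are not the LSST schema, and SQLite merely stands in for whatever database system the LSST actually uses. Note that astronomical magnitudes run backward: brighter means a smaller magnitude, so “brighter than 24” becomes `mag_r < 24`.

```python
import sqlite3

# Hypothetical toy schema: one row per object, one row per measurement.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE objects (object_id INTEGER PRIMARY KEY, kind TEXT, mag_r REAL);
CREATE TABLE sources (object_id INTEGER, mjd REAL, mag_r REAL);
""")
conn.executemany("INSERT INTO objects VALUES (?, ?, ?)", [
    (1, "galaxy", 22.1),
    (2, "galaxy", 25.3),
    (3, "star", 18.7),
    (4, "galaxy", 23.9),
])
# mjd: observation time as a Modified Julian Date (illustrative values).
conn.executemany("INSERT INTO sources VALUES (?, ?, ?)", [
    (3, 60002.1, 18.9),
    (3, 60000.2, 18.7),
    (3, 60001.3, 18.8),
])

# "Find all galaxies brighter than a given magnitude" (smaller magnitude = brighter).
limit = 24.0
bright_galaxies = [row[0] for row in conn.execute(
    "SELECT object_id FROM objects "
    "WHERE kind = 'galaxy' AND mag_r < ? ORDER BY object_id",
    (limit,),
)]

# "Extract the light curve" for one object: its measurements in time order.
light_curve = conn.execute(
    "SELECT mjd, mag_r FROM sources WHERE object_id = ? ORDER BY mjd",
    (3,),
).fetchall()

print(bright_galaxies)
print(light_curve)
```

The `?` placeholders pass the magnitude limit and object ID as parameters rather than splicing them into the query string, which is the usual way to run the same question with different inputs.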

Dramatic mirror

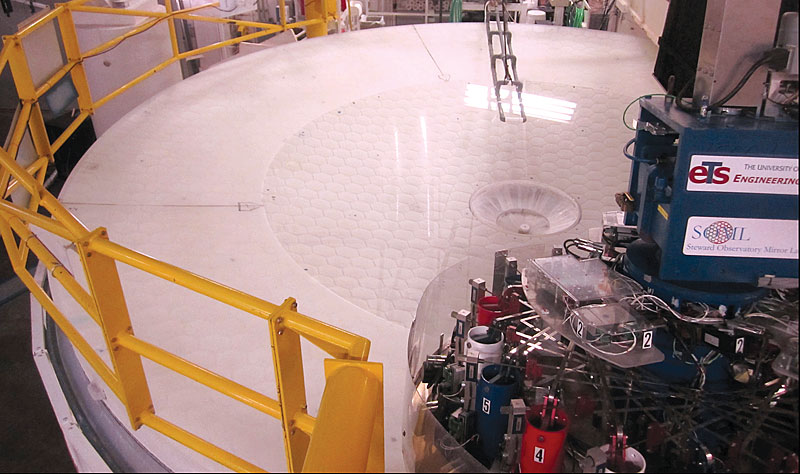

The LSST gets its wide field of view—9.6 square degrees, or about 45 times the size of the full Moon—from a three-mirror design. “Any fewer mirrors does not allow corrections of aberrations over such a wide field,” says Roger Angel, director of the University of Arizona’s mirror laboratory. The LSST’s primary and tertiary surfaces are polished into a single 8.4-m glass blank (see image); the mirror has been cast and is now being polished, thanks to gifts totaling $30 million from the Charles Simonyi Fund for Arts and Sciences and from Bill Gates. The monolithic design “is dramatic,” says Angel. “With a deep bowl set within a shallower bowl, it’s like no other mirror.”

Casting the primary mirror (outer 8.4-m dish) and tertiary mirror (inner 5-m dish) out of a single piece of glass saves money and reduces alignment problems for the Large Synoptic Survey Telescope.

LSST/UNIVERSITY OF ARIZONA STEWARD OBSERVATORY MIRROR LAB
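The comparison of the 9.6-square-degree field with “45 full Moons” follows from treating the Moon as a disk about 0.52° across. That diameter is a typical value assumed here, not taken from the article; the Moon’s apparent size varies by several percent over its orbit. The article also does not give the field’s shape, so the equivalent circle below is just a way to express the area as an angular size.

```python
import math

FIELD_SQ_DEG = 9.6        # LSST field of view (from the article)
MOON_DIAMETER_DEG = 0.52  # assumed typical apparent diameter of the full Moon


def disk_area_sq_deg(diameter_deg: float) -> float:
    """Area of a small circular patch of sky, using the flat-sky approximation."""
    return math.pi * (diameter_deg / 2) ** 2


def disk_diameter_deg(area_sq_deg: float) -> float:
    """Diameter of a circle with the given area (flat-sky approximation)."""
    return 2 * math.sqrt(area_sq_deg / math.pi)


moon_area = disk_area_sq_deg(MOON_DIAMETER_DEG)
moons = FIELD_SQ_DEG / moon_area
print(f"full Moon covers about {moon_area:.2f} sq deg")
print(f"field of view = about {moons:.0f} full Moons")
print(f"a circle of 9.6 sq deg is about {disk_diameter_deg(FIELD_SQ_DEG):.1f} deg across")
```

The flat-sky approximation is adequate here because both patches are only a few degrees across, far smaller than the curvature scale of the celestial sphere.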

The LSST is not the first telescope with three mirrors. But when Angel built one 30 years ago, “the only CCDs you could get were 500 pixels square. And the computers affordable then were even worse. Even this one small [CCD] chip was giving us more data than we could handle,” he says. “I didn’t look at wide-field telescopes for years.”

Weak lensing, powerful tool

LSST scientists aim to measure the shapes and positions of about 4 billion galaxies. By using stars to determine the distortions due to optics and the atmosphere, they can isolate the subtle effects of weak lensing by intervening mass. “What we care about is measuring the shapes of galaxies well, and at different distances and redshifts, so we can see the evolution of dark matter as a function of cosmic time,” says Princeton University’s Michael Strauss, who heads the LSST science advisory committee. “[These measurements] become a powerful tool to constrain dark energy.”
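Using stars to remove optical and atmospheric blurring can be illustrated with a toy model. For noise-free images, convolving a galaxy with the point-spread function (PSF) adds the PSF’s second moments to the galaxy’s, so subtracting a star’s measured moments recovers the galaxy’s own shape. The sketch below assumes Gaussian profiles, unweighted moments, and the ellipticity definitions e1 = (Qxx - Qyy)/(Qxx + Qyy) and e2 = 2Qxy/(Qxx + Qyy). These are simplifying assumptions for illustration; real weak-lensing pipelines, including the LSST’s, use far more careful, noise-robust methods.

```python
import math


def gaussian_image(size, cxx, cyy, cxy):
    """Noise-free 2D Gaussian with covariance [[cxx, cxy], [cxy, cyy]] in
    pixels^2, centered on a size x size grid (rows are y, columns are x)."""
    det = cxx * cyy - cxy * cxy
    ixx, iyy, ixy = cyy / det, cxx / det, -cxy / det  # inverse covariance
    c = (size - 1) / 2.0
    return [[math.exp(-0.5 * (ixx * (i - c) ** 2
                              + 2 * ixy * (i - c) * (j - c)
                              + iyy * (j - c) ** 2))
             for i in range(size)]
            for j in range(size)]


def second_moments(img):
    """Unweighted, flux-normalized second moments (Qxx, Qyy, Qxy) about the centroid."""
    pix = [(i, j, v) for j, row in enumerate(img) for i, v in enumerate(row)]
    flux = sum(v for _, _, v in pix)
    xc = sum(i * v for i, _, v in pix) / flux
    yc = sum(j * v for _, j, v in pix) / flux
    qxx = sum(v * (i - xc) ** 2 for i, _, v in pix) / flux
    qyy = sum(v * (j - yc) ** 2 for _, j, v in pix) / flux
    qxy = sum(v * (i - xc) * (j - yc) for i, j, v in pix) / flux
    return qxx, qyy, qxy


def ellipticity(qxx, qyy, qxy):
    """Ellipticity components (e1, e2) from second moments."""
    total = qxx + qyy
    return (qxx - qyy) / total, 2 * qxy / total


# Toy inputs (assumed): a slightly elliptical PSF and an elliptical galaxy.
PSF = (4.0, 3.0, 0.3)
GALAXY = (6.0, 4.0, 1.0)  # intrinsic (pre-blur) covariance
# Convolving Gaussians adds covariances, so the observed galaxy is GALAXY + PSF.
observed = tuple(g + p for g, p in zip(GALAXY, PSF))

star_q = second_moments(gaussian_image(64, *PSF))      # a star samples the PSF
gal_q = second_moments(gaussian_image(64, *observed))  # blurred galaxy

raw_e = ellipticity(*gal_q)
corrected_e = ellipticity(*(g - s for g, s in zip(gal_q, star_q)))
true_e = ellipticity(*GALAXY)
print("uncorrected:  ", [round(e, 3) for e in raw_e])
print("PSF-corrected:", [round(e, 3) for e in corrected_e])
print("intrinsic:    ", [round(e, 3) for e in true_e])
```

Without the star-based correction the blur dilutes and skews the measured shape; after subtracting the star’s moments the intrinsic ellipticity comes back. The lensing signal sought by LSST is a small, coherent shift in such shapes across many galaxies, which is why the PSF must be removed so precisely.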

Shedding light on dark energy is the main draw for DOE. But some of the instruments, the enormous volume of data, and the sociology of the collaboration are in many ways more typical of the high-energy physics experiments that DOE engages in than of the largely single-investigator science that NSF is usually involved with. Steven Kahn, of SLAC and Stanford University, heads DOE participation in the LSST and recently cofounded the 150-member LSST dark-energy science collaboration. “We have involved people trained as particle physicists,” he says. “Not only because they are attracted to working on dark energy, but also because the skills they bring—statistical analysis of large datasets—are highly relevant to these kinds of investigations.”

Worldwide support

“The time when astronomy was the science of looking at one object is past,” says Juric. “We are looking at populations.” The shift to data processing and statistics may “make astronomy less romantic, but it’s happened,” he says. “And it is empowering. If you are a graduate student or a scientist at an institution with limited access to world-class telescopes, it levels the playing field.”

In the run-up to the National Science Board approval, NSF and DOE asked the LSST collaboration to seek help paying for the telescope’s operations. In just a few months, Tyson drummed up letters from 68 institutions in 26 countries, in which the institutions pledged to support operations. NSF and DOE will cover three-quarters of the $37 million annual costs, with the rest paid through yearly subscription fees of about $20 000 per principal investigator. The alerts will be open to anyone. But unlimited access to the data will be reserved for scientists based in the US or in host country Chile and for subscribers.
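The funding split implies roughly how many subscribing principal investigators the plan needs. This simple estimate assumes that the quarter not covered by NSF and DOE is paid entirely by subscription fees of exactly $20 000; the article says “about,” so the result is approximate.

```python
import math

ANNUAL_OPS_COST = 37_000_000  # dollars per year (from the article)
AGENCY_SHARE = 0.75           # NSF + DOE cover three-quarters (from the article)
FEE_PER_PI = 20_000           # approximate yearly subscription per PI (from the article)


def subscribers_needed(cost: float, agency_share: float, fee: float) -> int:
    """Smallest whole number of subscribing PIs whose fees cover the remainder."""
    remainder = cost * (1 - agency_share)
    return math.ceil(remainder / fee)


agency_part = ANNUAL_OPS_COST * AGENCY_SHARE
subscription_part = ANNUAL_OPS_COST - agency_part
print(f"agencies: ${agency_part / 1e6:.2f}M per year")
print(f"subscriptions: ${subscription_part / 1e6:.2f}M per year")
print(subscribers_needed(ANNUAL_OPS_COST, AGENCY_SHARE, FEE_PER_PI), "subscribing PIs")
```

The subscription share works out to about $9.25 million a year, or a little over 460 principal investigators at the quoted fee.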

For now the door is closed to new members, says Tyson. The early pledgers “did a huge amount of work to overcome political problems and get signatures in a short time.” Tyson says the collaboration will leave open the possibility of others joining later under different terms. “But if you want to do cutting-edge science, you better get close to the experiment early on.”


Toni Feder, tfeder@aip.org