Job count is the leading indicator of basic research benefits

DOI: 10.1063/PT.3.1210

With the US government focused on deficit reduction, justifying the worth of federally funded research has arguably never been more important. Spending cuts are likely to be the norm, rather than the increases that President Obama requested in February for science and technology programs, and basic research, historically held in high regard on both sides of the aisle, will do well to break even in fiscal year 2012.

The House bill that provides FY 2012 funding for the Department of Energy demonstrates lawmakers’ attitudes toward basic research: In that bill, appropriators order DOE to conduct performance assessments of its basic research programs. The measure takes particular aim at the portfolio of investigator-initiated research grants that make up four-fifths of the DOE Office of Science’s $855 million basic energy sciences program. The House bill instructs the office to carry out performance reviews of those grants and to terminate $20 million worth of the lowest-performing ones.

Jobs numbers, one measure of basic research impact, have received much attention in the current economic climate. The American Recovery and Reinvestment Act (ARRA) of 2009, which poured $20 billion into basic research programs across the federal government, had as its primary goal the creation or retention of jobs. Recipients of grants funded with ARRA monies are required to provide quarterly reports to the Recovery Accountability and Transparency Board, an interagency group of auditors, on the number of jobs that those grants support. The data, posted at the Recovery.gov website, indicate that in the first quarter of this calendar year, more than 21 300 research-related jobs resulted from ARRA-funded grants awarded by the National Institutes of Health and 4307 jobs were supported by NSF’s ARRA-funded grants. In that same period, basic research grants from DOE supported just 659 jobs; most of the $1.8 billion the Office of Science received from ARRA was directed to infrastructure upgrades at the national laboratories. By contrast, DOE reported that 29 000 jobs resulted from ARRA funding for its applied research programs in energy efficiency and renewable energy.

The jobs numbers at Recovery.gov count full-time equivalent (FTE) jobs supported by ARRA in the first quarter of 2011. But adding up all the quarterly job numbers since the act took effect in 2009 won’t provide the total number of R&D-related jobs it generated. Many positions in sponsored research go to graduate or undergraduate students whose jobs might last only a semester or two. And principal investigators are often supported by multiple grants. In those cases, a widely accepted formula is used to determine the fraction of a job that is attributable to each award.
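The article does not spell out the proration formula. As a rough illustration only, the Python sketch below assumes an hours-based approach: the hours a person works on, and is paid from, a given award in one quarter, divided by the hours in a full-time quarter. The 520-hour full-time quarter (40 hours a week for 13 weeks) and all the people, award labels, and hours are hypothetical.

```python
from dataclasses import dataclass

# Assumption: a full-time quarter is 40 hours/week for 13 weeks.
FULL_TIME_HOURS_PER_QUARTER = 40 * 13  # 520 hours


@dataclass
class Effort:
    person: str   # hypothetical worker label
    award: str    # hypothetical award identifier
    hours: float  # hours worked on, and paid by, this award in the quarter


def fte_by_award(efforts, full_time_hours=FULL_TIME_HOURS_PER_QUARTER):
    """Prorate each person's funded hours into fractional FTE jobs per award."""
    totals = {}
    for e in efforts:
        totals[e.award] = totals.get(e.award, 0.0) + e.hours / full_time_hours
    return totals


quarter = [
    Effort("PI", "award-A", 130),            # PI split across two awards
    Effort("PI", "award-B", 130),
    Effort("grad student", "award-A", 260),  # half-time student
    Effort("postdoc", "award-B", 520),       # full-time postdoc
]

per_award = fte_by_award(quarter)
total_fte = sum(per_award.values())
headcount = len({e.person for e in quarter})

print(per_award)   # {'award-A': 0.75, 'award-B': 1.25}
print(total_fte)   # 2.0
print(headcount)   # 3
```

In this made-up quarter, three people share 2.0 FTE jobs, and the PI's half-time effort is credited as a quarter of a job to each award. The sketch shows why FTE totals are not head counts.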

A proposal and award factory

The emphasis on jobs that came with ARRA was novel to the federal research bureaucracy. “With the stimulus, there was a huge push from the Hill to say who’s going to be supported by science funding. And we really weren’t able to answer that question,” says Julia Lane, director of the Science of Science and Innovation Policy program at NSF. “Science agencies had really never been asked before to document the results of their investments. What they’ve been asked to do is identify and fund the best research, and the entire data system reflects that.” She describes the existing system as “a proposal and award administration factory.”

Lane is a codeveloper of the Star Metrics database system, which is now collecting data from dozens of participating universities on jobs supported by federally sponsored research (see PHYSICS TODAY, August 2010, page 20).

Measuring the full economic impacts of ARRA-funded or other taxpayer-supported basic research is complicated. Quantitative metrics include the number and impact of the resulting research publications and the number of patents awarded. But qualitative effects, including new drugs, medical devices, and new fields of research that may result, are a necessary component of the evaluation, said NIH’s Luci Roberts, a panelist at a June symposium of the American Association for the Advancement of Science (AAAS). Eric Toone, a program manager for DOE’s Advanced Research Projects Agency–Energy, which awards grants to help develop new clean-energy technologies, said that “the only metric that matters is getting technologies into the marketplace.” But commercialization of products requires many years, he acknowledged, so the agency uses the amount of private capital attracted to a project as a “surrogate metric.”

More grants, less quality

Harvard University economist Richard Freeman, another panelist at the AAAS event, predicted that ARRA would have consequences similar to the aftermath of the five-year doubling of the NIH budget, completed in 2003. Freeman presented data showing that the mean number of scientific publications, impact factors, and numbers of patents per grant had declined as the number of NIH research grants swelled; the numbers indicated that grants that would not have made the cut before the doubling were dragging down the overall productivity of the agency’s grant portfolio. “[I] assume that something similar happened with the ARRA money,” Freeman said. “There was a lot of money that was pushed out that would have been better spent over a longer period of time.”

But Freeman said that ARRA was unlikely to reproduce the other major negative impact of the NIH doubling—the building of new laboratories and other research infrastructure in anticipation of continued large annual budget increases that failed to materialize. In ARRA’s case, recipients were aware up front that the awards were one time only.

John Marburger, science adviser to former president George W. Bush, says Star Metrics provides “very powerful tools” in arguing the case for greater funding for science and technology. “You can’t get Congress to buy into anything rational without a credible intellectual effort,” says Marburger, who contrasts the Star Metrics approach with the “slick advocacy” efforts he recalls from his years at the White House. The influential 2007 National Academies report Rising Above the Gathering Storm was composed without those tools, he notes, and despite agreeing with most of its recommendations, Marburger views that report as an attempt to “scare Congress into spending more money.”

There are risks to measuring research. Scientists may view the evaluation process as a threat or an auditing tool. “You may find the answer that you don’t want,” Stefano Bertuzzi of NIH, a codeveloper of Star Metrics, told the AAAS symposium. And no matter how good an evaluation process is, it is just one of the factors that lawmakers will consider in determining research policy and resources, said Chris King, a Democratic staff member of the House Committee on Science, Space, and Technology.

David Croson, a program director at NSF, told the AAAS gathering that one ARRA-funded NSF grant supported a study that counted patent applications during the recession. The researchers found that in most fields, patent applications fell more steeply than gross domestic product did. The exceptions were nanotechnology, biomass, and wind and solar energy, the fields specifically targeted by the stimulus spending. Another NSF-funded study found that spending on applied research, as opposed to basic research or development, delivered the “biggest short-term bang for the buck” in terms of economic impact.

More red tape ahead?

Although ARRA-sponsored research will dwindle in the coming months, added reporting requirements could become its legacy. As PHYSICS TODAY went to press, a bill that would extend ARRA paperwork standards to all federal research grants seemed destined for passage in the House of Representatives. The Digital Accountability and Transparency Act (H.R. 2146) would establish a universal standard for recipients to file reports for federal grants and contracts directly into an interagency database, such as Recovery.gov. The legislation has bipartisan support and was approved in June by the Committee on Oversight and Government Reform. A counterpart bill was introduced in the Senate on 16 June by Mark Warner (D-VA).

The Association of American Universities, the Association of Public and Land-grant Universities, and the Council on Governmental Relations have decried the bill. They maintain that the extra paperwork adds $7900 in costs per ARRA award. Unlike other nonprofit groups and private contractors, which can recover their paperwork costs from the funding agency, universities aren’t allowed to bill administrative costs that exceed 26% of the value of a grant. Most large research universities claimed costs in excess of the cap well before ARRA came along. One 2005 survey found that faculty involved in federally sponsored research spent 42% of their time on administrative activities, not on the research itself.

Not surprisingly, Star Metrics’ automated data reporting system is attractive to academia. William Valdez, an official at DOE’s Office of Science, says that once the Star Metrics software is added to an institution’s administrative network, data on jobs are captured as they are entered and fed into the Star Metrics database. No intervention by investigators is required, he says. As of early July, 80 universities had signed up to provide data on grants sponsored by the participating agencies. Lane and her colleagues will begin work this fall on what she calls phase 2: building out Star Metrics to factor in other economic, scientific, and social benefits of research. Patents, papers, and spinoff companies will be taken into account, but so will anecdotal information on outcomes, she says, adding that anecdotes are useful only if they accurately reflect the quantitative results.

Lane insists that Star Metrics will remain voluntary. “You get much better data and much more innovation if it’s voluntary,” she said in a phone interview from Japan, where she was speaking at a conference on measuring R&D. “If you get the research institutions engaged in figuring out how we get information for the public about the results of science investments, then they create things. But if it’s mandatory, they just send the data.”

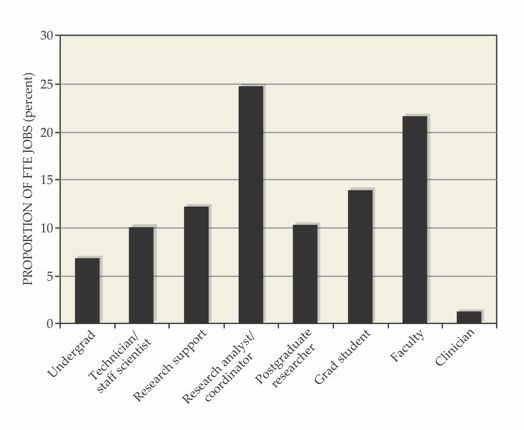

Only around one-fifth of the full-time equivalent (FTE) jobs traceable to most federally sponsored basic research were faculty positions, according to data from 55 large research universities that participate in the Star Metrics data-gathering system.

NSF


David Kramer, dkramer@aip.org