Cancer, brain research, and supercomputing

DOI: 10.1063/PT.3.3077

When computers capable of working at the exascale level (10¹⁸ floating-point calculations per second) come on line, they will be brought to bear on figuring out how another, quite different computer, the human brain, works. With that goal in mind, Energy secretary Ernest Moniz and National Institutes of Health director Francis Collins are exploring how to bring the Department of Energy, which houses the nation’s leading supercomputers, into the presidential initiative known as BRAIN (Brain Research through Advancing Innovative Neurotechnologies; see Physics Today, December 2013, page 20).

The brain is just one area of biomedical research that could benefit from the computational and physical sciences expertise at DOE and its national laboratories. In December Moniz asked his Secretary of Energy Advisory Board (SEAB) to look for ways to increase DOE’s contribution to biomedical sciences. A SEAB task force, cochaired by former NIH and National Cancer Institute (NCI) director Harold Varmus and former DOE undersecretary Steven Koonin, will report to him in September.

The BRAIN Initiative will require advances across several scientific fields. “We need better ways of detecting and recording neural signals,” says Roderic Pettigrew, director of NIH’s National Institute of Biomedical Imaging and Bioengineering. “Then we need analytical tools to interpret those signals. We need ways of deciphering meaningful signals from noise, an area DOE scientists are accustomed to dealing with.”

Another area of focus is the modeling of what goes on in the brain, resolved in three dimensions and in time. “People often don’t think of the time domain of medical data,” notes Pettigrew, the designated liaison to DOE. “But life is temporal, and biological dimensions change in the time domain. Proteins fold and unfold, protein receptors go from an inactive to an active state.”

In October representatives from the two agencies held a jointly sponsored BRAIN workshop at Argonne National Laboratory that coincided with a major neuroscience conference in nearby Chicago. Reports from those discussions were delivered to Moniz and Collins but haven’t been made public.

“There is a lot of opportunity and a lot of need in the neuroscience community to benefit from the tools and the organization of the labs to do this kind of big project,” Moniz told reporters in November, days before issuing his charge to SEAB.

Dimitri Kusnezov, chief scientist for DOE’s National Nuclear Security Administration, is involved in discussions with NIH. “The question we’re asking ourselves is, Are there real wins in pushing diagnostics—for example, in a multimode analysis—or is the community geared to move forward at the same pace anyway?” he says. “Can we accelerate things in a significant way or not? We don’t have the answer yet.”

Biomedical research has long benefited from DOE assets. Life-sciences researchers represent the single largest sector of users (about 40%) at the DOE national laboratories’ x-ray light sources, half of whom are supported by NIH. And initial genome-sequencing work at Los Alamos National Laboratory begat the NIH-led Human Genome Project.

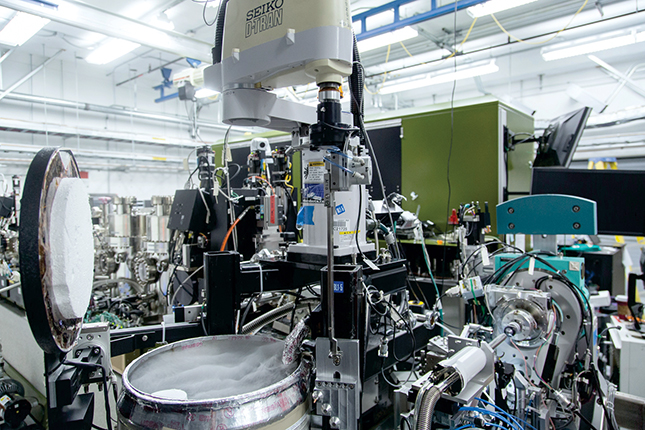

A highly automated, robotic x-ray crystallography system at SLAC’s Linac Coherent Light Source x-ray laser. The metal drum at the lower left contains liquid nitrogen for cooling crystallized samples. This setup was used to explore the molecular machinery involved in brain signaling in atomic-scale detail.

SLAC

The nanoscale-science research centers operated by the national labs and other groups have been developing sensors that can read nanoparticles. “It’s conceivable that nanoparticles with certain characteristics can be embedded in a living system like a brain,” says Steve Binkley, associate director for advanced scientific computing research in DOE’s Office of Science. “And one could then also conceive of reading the signals coming out of them. The holy grail is to get real-time mapping of signals that exist in neurons as a function of time to certain stimuli,” he says. Such mapping has been done with mice, but scientists used invasive probes not suitable for research on humans.

Imaging is another DOE strength that will be useful to BRAIN, Binkley says. The labs have expertise using UV, x rays, IR, coherent light sources, and lasers for imaging. “It’s often not obvious at the outset how one puts all those things together to image a certain type of thing. I’ve come across people in the lab system who are really good at knitting together the right pieces to be able to image a particular thing,” he says. “Very often the physical processes underlying a given phenomenon are elucidated by being able to image them.”

Computing and cancer

One focus area of increased DOE–NIH collaboration already under way is high-performance computing at the NCI, says Kusnezov. “We started talking to the NCI to understand if computing opens the door for opportunities in their agency that simply wouldn’t happen [otherwise]. Because the expertise is largely resident at DOE labs.”

Achieving exascale computing, expected in the early 2020s, will entail developing components that could prove useful by themselves, such as 100-teraflop desktop systems powered from a standard 15-amp wall socket, says Kusnezov. “You could have a teraflop chip that is wearable. With that amount of computing you could think about sticking a 100-teraflop system under an intensive care unit bed to do some machine learning and data analytics,” he says. That would be a boon to the presidential Precision Medicine Initiative, which seeks to develop therapies tailored to the individual.
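To put the wall-socket figure in perspective, the back-of-the-envelope calculation below estimates the energy efficiency such a desktop system would need. It assumes a standard US 120-volt outlet and treats the full 15-amp rating as available to the machine, which is an idealized upper bound on power draw; both assumptions are ours, not Kusnezov’s.

```latex
% Assumed: 120 V US outlet, full 15 A rating available (idealized upper bound)
P_{\max} = V I = (120\ \mathrm{V})(15\ \mathrm{A}) = 1800\ \mathrm{W}

% Required efficiency for 100 teraflops = 10^{14} floating-point operations per second
\eta = \frac{10^{14}\ \mathrm{FLOP/s}}{1800\ \mathrm{W}}
     \approx 5.6 \times 10^{10}\ \mathrm{FLOP/s\ per\ W}
     \approx 56\ \mathrm{gigaflops\ per\ watt}
```

Any real deployment would have to leave headroom for cooling, storage, and other loads on the same circuit, so the efficiency actually required would be somewhat higher than this estimate.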

Performing detailed calculations on individual molecules is one area in which high-performance computing contributes to medical science today, says Binkley. “A lot of the properties in biological systems that are of interest trace back to molecules that are involved in cells. Any time you make an advance in the ability to calculate molecular properties, especially large molecules, it has impacts on biological science.”

High-performance computation also accelerates the analysis of the large data sets that are produced from genomic studies. “It allows a more systematic approach to understanding how individual genes play in the functionality of organisms,” says Binkley. The ability to deduce the function of a protein from its gene sequence is the ultimate goal, he says. “That’s something we’ve dreamed about and we’re not there yet. But eventually, as computer power goes up, we’re hoping to make progress in that area.”

In a pilot interaction, DOE’s computational expertise is being applied to an NCI effort to improve our understanding of mutations in a family of genes that drive more than 30% of all cancers, including 95% of pancreatic cancers. Those so-called Ras genes encode for signaling proteins that bind to cell membranes in particular ways. Under a 2013 initiative, the NCI has been exploring innovative ways to attack mutant forms of the Ras proteins and ultimately to develop new therapies for the cancers they cause.

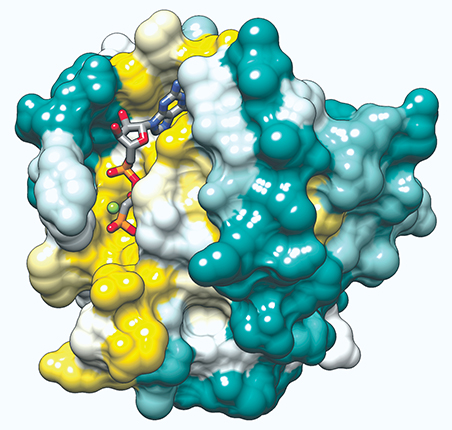

Structure of the HRas protein. Mutations in the genes that encode for Ras proteins cause about 30% of cancers.

ELAINE MENG, UCSF VIA WIKIMEDIA COMMONS

X-ray crystallography, cryoelectron microscopy, and other imaging techniques have generated vast amounts of physical, chemical, and biological information on Ras and Ras variants. “The idea is to take the Ras molecule and various Ras mutations and start to tease them apart from a physical and chemical characterization standpoint,” says Warren Kibbe, director of the NCI’s Center for Biomedical Informatics and Information Technology. Then it may be possible to understand how a given mutation affects the interaction of Ras with the membrane. “Rather than having to generate all this data from every single molecular change, is there a way to start doing simulations that allow us to either augment or replace some of these various, very tedious experimental methods?” Kibbe asks.

Molecular dynamics, density functional theory, and other tools used by DOE computational scientists to produce simulations of materials systems are directly relevant to the Ras Initiative, Kibbe says. The physical characterization data sets that the NCI has already generated on different Ras variants can be used in refining new simulations that DOE develops.

Potentially transformative

The NCI’s supercomputing infrastructure is dwarfed by DOE’s, Kibbe says. Rather than trying to duplicate the kind of computing resources that DOE has, the NCI wants to “help inform the next generation of supercomputers at the national labs so they are appropriate for biomedical research.” Currently the NCI has access to computers that can either handle large amounts of data or focus on computation. What it needs today are machines that do both, Kibbe says.

The collaboration, which Kusnezov says must be mutually beneficial to work, will be “potentially transformative” for DOE. “We see an opportunity to put machine-learning chips and big-data analytic chips together with the [central processing unit], and have them work together,” he says. “Traditionally when we’ve designed the next-generation architectures, it’s been toward a more traditional view of high-performance computing: solving partial differential equations, evolving things in time, doing some large Monte Carlo [simulations].”

The NCI’s wealth of data could help DOE address the challenge of validating some of the tools that are developed for the new computing architectures across many length scales, says Kusnezov. “We run a simulation and compare it to data. But we don’t do it dynamically. The question is, If we had machine learning that was tuned to dynamic streams of data, could we run it in parallel in our large simulations to try to dynamically validate our codes across length scales in real time?”


David Kramer, dkramer@aip.org