A broad acoustic bandwidth helps listeners understand fragmented speech

DOI: 10.1063/PT.3.4404

Fortunately for the 50 million Americans who experience hearing loss, hearing aids have improved since the cumbersome, hand-held trumpets of the late 18th century. Back then, mechanically amplifying any and every sound frequency was the only option.

Today, audiologists know that loss of sensitivity to sound first becomes measurable at frequencies around 4000–8000 Hz. That is much higher than the dominant frequencies in normal speech, which range from about 100 Hz to 1000 Hz. Most modern hearing aids provide frequency-dependent amplification tailored to the severity of an individual’s loss at each frequency. That approach works well in quiet settings.
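As a rough illustration of frequency-dependent amplification, the sketch below applies the classic half-gain rule, which prescribes a gain of about half the hearing loss at each frequency. That rule is an assumption chosen for simplicity and is not described in the article. Modern hearing aids use more elaborate fitting formulas (NAL-NL2 is one example). The function name and the audiogram values are hypothetical.

```python
def half_gain_prescription(thresholds_db_hl):
    """Map each frequency (Hz) to a gain (dB) using the half-gain rule.

    thresholds_db_hl: dict of frequency -> hearing threshold in dB HL.
    Thresholds at or below 0 dB HL (normal hearing) receive no gain.
    """
    return {freq: 0.5 * max(loss, 0.0) for freq, loss in thresholds_db_hl.items()}


if __name__ == "__main__":
    # Hypothetical audiogram with a loss that grows toward high frequencies.
    audiogram = {250: 10, 500: 10, 1000: 15, 2000: 30, 4000: 50, 8000: 60}
    for freq, gain in half_gain_prescription(audiogram).items():
        print(f"{freq:>5} Hz: {gain:4.1f} dB gain")
```

The gain rises toward high frequencies because the hypothetical loss does. Real fitting formulas also account for loudness, compression, and comfort.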

In noisy settings, however, listeners must also separate competing talkers and interpret speech from just a few heard fragments. That problem goes beyond amplification alone. (See the article by Emily Myers, Physics Today, April 2017, page 34.)

The importance of extremely high-frequency sounds may not be immediately apparent in daily life, but even mundane speech contains important information at high frequencies. For example, the fricative consonants “th,” “s,” and “f” have energy beyond 4000 Hz when spoken, so words containing those consonants may be difficult to distinguish for someone who is beginning to experience high-frequency hearing loss. Luckily, speech is a highly redundant signal; robust information in one frequency band can compensate for lost information in another, and the brain can often fill in the gaps from what’s left.

How the brain uses high-frequency cues to understand speech in noisy settings remains a puzzle. However, Virginia Best and colleagues at Boston University have made a new and significant observation: Systematically removing sound energy at high frequencies can dramatically impair the understanding of speech that is partially masked by competing talkers (ref. 1).

Sounds of silence

When multiple people talk simultaneously, their overlapping voices mask the speech of interest at some or all frequencies for stretches of time. The remaining moments, during which the speech of interest stands out in the acoustic mixture, are known as “glimpses.” Glimpses arise when the background noise momentarily drops or when the talker of interest momentarily gets louder. Listeners combine glimpses with contextual information to fill in the missing acoustic information and reconstruct the complete message (ref. 2).

Most of what audiology researchers know about glimpsed speech comes from studies that measure how well subjects understand speech that is periodically interrupted by silence or by interfering sounds. In those studies, listeners with hearing loss performed worse than those with normal hearing.

Understanding speech amid competing talkers is especially challenging because the extraneous speech sounds very similar to the target. In recent studies, subjects listened only to those fragments of a multi-talker mixture in which the target talker’s voice dominated (ref. 3). Those studies suggested that the main difficulty arises not from isolating the target speech but from understanding the target message when bits and pieces of it are missing.

Other auditory studies have investigated the importance of high frequencies for a listener’s ability to understand uninterrupted speech (ref. 4). Those studies determined how many syllables a listener could correctly identify in sentences from which various frequency ranges had been filtered out. The results suggested that speech intelligibility benefits from a broad bandwidth that includes frequencies above 3000 Hz.

A few words

Best and her colleagues hypothesized that a loss of audibility at high frequencies would impair a listener’s ability to understand glimpsed speech more than uninterrupted speech. To test the hypothesis and to understand why hearing loss has such a dramatic effect in multi-talker environments, the researchers played normal-hearing adults recordings created from three sentences presented simultaneously. Unlike previous work, the recordings preserved only the glimpses of the target sentence; the segments masked by the competing talkers were removed.

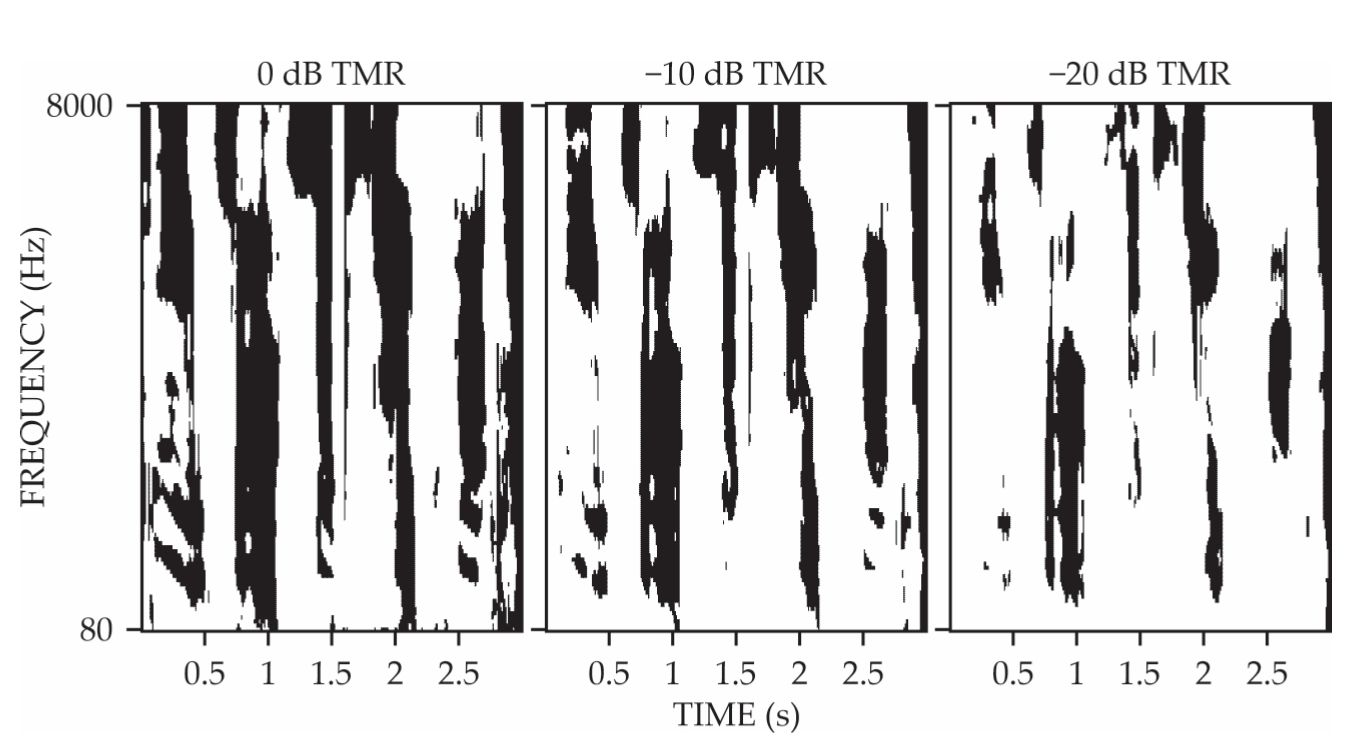

To vary the number of available glimpses, the researchers extracted the target speech from mixtures in which the competing talkers were presented at different levels. Progressively louder competing sentences produced increasingly negative target-to-masker ratios (0, −10, and −20 dB), which express how much quieter the target was than the competition. In the mixture with the loudest competitors, the speech of interest rarely dominated, so the glimpses were short and few. In the quieter mixtures, the glimpses were longer and more numerous, as illustrated in figure 1.

Figure 1.

Listeners identified words based on glimpses of target sentences that were masked by two competing sentences, for a total of three overlapping sentences, at target-to-masker ratios (TMRs) of 0, −10, and −20 dB. The black regions indicate the times and frequencies at which the target speech was more intense than the competing speech. (Adapted from ref. 1.)
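The glimpse definition in the caption can be sketched as a level comparison in each time-frequency bin. The sketch below is a minimal illustration built on several assumptions that are not taken from the study. Levels are given in dB per frequency band and time frame; a real analysis would first compute them with a filterbank. Lowering the TMR by x dB is modeled as raising every masker bin by x dB. Low-pass filtering is modeled by discarding bands above a cutoff, and the glimpse proportion is counted over all bins of the unfiltered signal. The function names and toy levels are hypothetical.

```python
def glimpse_mask(target_db, masker_db, tmr_db=0.0):
    """Return [band][frame] booleans: True where the target is more intense.

    target_db, masker_db: [band][frame] levels in dB, measured with target
    and masker at equal overall level (0 dB TMR). A TMR of -x dB is modeled
    by raising every masker bin by x dB.
    """
    return [
        [t > m - tmr_db for t, m in zip(t_row, m_row)]
        for t_row, m_row in zip(target_db, masker_db)
    ]


def glimpse_proportion(target_db, masker_db, band_freqs_hz,
                       tmr_db=0.0, cutoff_hz=float("inf")):
    """Fraction of all bins that are glimpses in bands at or below cutoff_hz."""
    mask = glimpse_mask(target_db, masker_db, tmr_db)
    total_bins = sum(len(row) for row in mask)
    # Bands above the cutoff are treated as filtered out, so their glimpses are lost.
    kept = sum(
        sum(row)  # each True counts as 1
        for row, freq in zip(mask, band_freqs_hz)
        if freq <= cutoff_hz
    )
    return kept / total_bins


if __name__ == "__main__":
    # Toy levels (dB) for three bands x four frames; not data from the study.
    freqs = [500, 2000, 6000]
    target = [[60, 60, 60, 60], [50, 50, 50, 50], [40, 40, 40, 40]]
    masker = [[55, 65, 55, 65], [45, 45, 55, 55], [25, 35, 45, 28]]
    print(round(glimpse_proportion(target, masker, freqs), 3))                  # 0.583
    print(round(glimpse_proportion(target, masker, freqs, tmr_db=-10), 3))      # 0.167
    print(round(glimpse_proportion(target, masker, freqs, cutoff_hz=3000), 3))  # 0.333
```

In this toy case, both a more negative TMR and a lower cutoff shrink the glimpse proportion. The cutoff matters because the highest band still holds glimpses when the lower bands are swamped.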

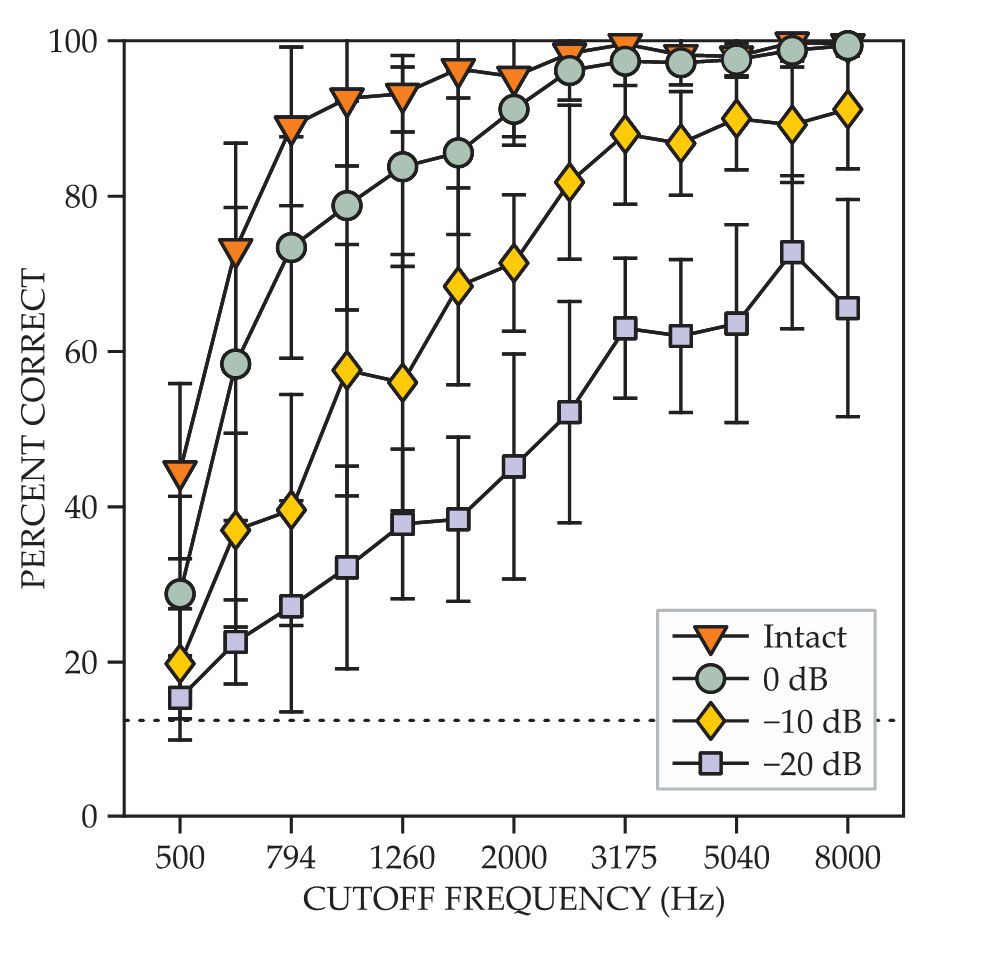

In each listening condition, Best and colleagues measured speech intelligibility by scoring the words that listeners reported hearing. The researchers used those scores to determine the bandwidth at which a loss of high-frequency information affected intelligibility. The “minimum bandwidth” is the bandwidth required to reach a specified level of performance, which Best’s team defined as correctly reporting 90% of the words. A lower minimum bandwidth corresponds to better intelligibility, because listeners reach that criterion with less high-frequency information (ref. 5).
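The article does not say how the 90% point was extracted from the scores. One simple possibility, shown here purely as an assumption, is to interpolate between the two measured cutoffs that bracket the criterion, with frequency on a logarithmic (octave) scale. The study itself may have fitted a smooth psychometric function instead. The function name is hypothetical.

```python
import math


def minimum_bandwidth(cutoffs_hz, percent_correct, criterion=90.0):
    """Estimate the lowest cutoff (Hz) at which the score reaches `criterion`.

    cutoffs_hz must be in ascending order. Between the two measured cutoffs
    that bracket the criterion, the score is interpolated linearly against
    log2(frequency). Returns None if the criterion is never reached.
    """
    points = list(zip(cutoffs_hz, percent_correct))
    if not points:
        return None
    if points[0][1] >= criterion:
        return float(points[0][0])
    for (f_lo, s_lo), (f_hi, s_hi) in zip(points, points[1:]):
        # s_lo < criterion <= s_hi guarantees s_hi > s_lo, so no division by zero.
        if s_lo < criterion <= s_hi:
            frac = (criterion - s_lo) / (s_hi - s_lo)
            log_f = math.log2(f_lo) + frac * (math.log2(f_hi) - math.log2(f_lo))
            return 2.0 ** log_f
    return None


if __name__ == "__main__":
    # Made-up scores for illustration only; not results from the study.
    print(round(minimum_bandwidth([1000, 2000, 4000], [50, 80, 100])))  # 2828
```

Here 90% lies halfway between 80% and 100%, so the estimate falls halfway between 2000 and 4000 Hz on an octave scale, at about 2828 Hz.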

Analysis of the available speech information confirmed that the proportion of time filled by glimpses declined as the target-to-masker ratio became more negative and as high-frequency content was removed. Speech intelligibility, in turn, declined systematically with increasingly negative target-to-masker ratios and with lower cutoff frequencies, as shown in figure 2.

Figure 2.

For intact speech and for glimpsed speech at three target-to-masker ratios, listeners reported the words that they heard. Percent correct began to decline at higher cutoff frequencies for the glimpsed speech than for the intact speech. Cutoff frequencies were chosen by dividing the range 500–8000 Hz into third-octave steps. (Adapted from ref. 1.)
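Third-octave spacing means each cutoff is 2^(1/3), or about 1.26, times the previous one. The sketch below assumes that both endpoints, 500 and 8000 Hz, are included, which gives 13 cutoffs across the four octaves. The function name is hypothetical. It uses exact base-2 spacing, whereas standard nominal band labels round some values (for example, 800 Hz rather than 794 Hz).

```python
import math


def third_octave_cutoffs(f_low=500.0, f_high=8000.0):
    """Return cutoffs from f_low to f_high, each one-third octave above the last.

    Assumes f_high / f_low spans a whole number of third-octave steps.
    """
    n_steps = round(3 * math.log2(f_high / f_low))  # 12 steps for 500-8000 Hz
    return [f_low * 2 ** (k / 3) for k in range(n_steps + 1)]


if __name__ == "__main__":
    print([round(f) for f in third_octave_cutoffs()])
    # [500, 630, 794, 1000, 1260, 1587, 2000, 2520, 3175, 4000, 5040, 6350, 8000]
```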

Hearing loss often starts at the highest frequencies and works its way down. The new findings suggest that those high frequencies matter more for comprehension in the presence of competing talkers than in quiet situations, which may be an important consideration for hearing aid design. Unfortunately, although providing more gain at high frequencies can improve audibility, the added loudness can also cause discomfort. Further research could identify new ways to customize amplification for different people and listening situations; such customization should yield both better comprehension and greater comfort.

References

1. V. Best et al., J. Acoust. Soc. Am. 146, 3215 (2019). https://doi.org/10.1121/1.5131651

2. G. Kidd et al., J. Acoust. Soc. Am. 140, 132 (2016). https://doi.org/10.1121/1.4954748

3. V. Best et al., J. Acoust. Soc. Am. 141, 81 (2017). https://doi.org/10.1121/1.4973620

4. B. C. J. Moore, Int. J. Audiol. 55, 707 (2016). https://doi.org/10.1080/14992027.2016.1204565

5. A. B. Silberer, R. Bentler, Y. H. Wu, Int. J. Audiol. 54, 865 (2015). https://doi.org/10.3109/14992027.2015.1051666