The pursuit of reliable earthquake forecasting

DOI: 10.1063/pt.tdwq.nppx

Damage in Turkey from the 2023 earthquake that struck Turkey and Syria. (Photo by Doruk Aksel Anıl/Pexels.)

Earthquakes—a subject of fear and fascination—are among nature’s most destructive phenomena, capable of causing widespread devastation and loss of life. They occur through the sudden release of gradually accumulated tectonic stress in Earth’s crust. The faults that host earthquakes are part of a complex system with many unknown or unknowable parameters. Small changes in the subsurface can lead to large changes in seismic activity. Faults behave unpredictably, even in laboratory settings. Indicators of forthcoming earthquakes, such as foreshocks, occur inconsistently.

Despite advances in seismology, accurately predicting the time, location, and magnitude of an earthquake remains difficult to achieve. The slow buildup of stress along faults is challenging to model and to measure. The influence of background conditions, static and dynamic stress transfer, and past earthquake history are also hard to quantify. That unpredictability, combined with the infrequent occurrence and rapid onset of earthquakes, makes taking action to prepare for them uniquely difficult.

The advent of big data and AI has created exciting possibilities for identifying new features in vast amounts of seismic data that might portend forthcoming earthquakes. The use of such technological advances in earthquake forecasting and prediction, however, is in its early stages. Integrating AI and big data into seismology will, at a minimum, provide a more complete view of seismic activity. But it also has the potential to lead to breakthroughs that could help manage or mitigate earthquake risk.

Although the terms “prediction” and “forecasting” may seem interchangeable, seismologists make an important distinction between them. Earthquake prediction aims to identify the time, location, and magnitude of a future earthquake with enough determinism to inform targeted actions. For example, an earthquake prediction might state that a magnitude 7.0 earthquake will occur in San Francisco on 15 July 2050 at 3:00pm. That level of specificity would enable city officials to order evacuations and take other steps to protect residents.

Earthquake early warning (EEW) systems are sometimes conflated with earthquake prediction, but they are not a prediction tool. EEW systems detect the first energy released by an earthquake—after fault rupture is already underway—and then issue alerts that can provide seconds to minutes of warning before strong ground shaking arrives. Though EEW systems cannot predict earthquakes before they start, they can provide valuable time for people to take protective actions, such as seeking cover or stopping hazardous activities.

Earthquake forecasting, in contrast, offers a probabilistic description of earthquakes within a specified region and time frame. For example, a forecast might report a 20% probability of a magnitude 6.0 or greater earthquake occurring in Southern California in the next 30 years. Though such information is valuable for long-term planning and risk assessment, it doesn’t enable the same level of targeted action as a prediction. Official forecasts are currently issued by many governmental agencies. Long-term forecasts inform building codes and insurance rates. Aftershock forecasts, which can include regional warnings of a short-term increase in the probability of damaging earthquakes, inform the public and first responders. Many efforts are underway to improve probabilistic forecasting, and AI is beginning to be included in them.
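A probability statement of that kind can be translated into an equivalent average rate if one is willing to assume a simple time-independent (Poisson) process. The sketch below is illustrative only: the Poisson assumption and the function names are ours, not the procedure of any forecasting agency.

```python
import math

def poisson_probability(annual_rate: float, years: float) -> float:
    """Probability of at least one event in `years`, assuming a constant-rate Poisson process."""
    return 1.0 - math.exp(-annual_rate * years)

def implied_annual_rate(probability: float, years: float) -> float:
    """Invert the relation above: the constant annual rate implied by a stated probability."""
    return -math.log(1.0 - probability) / years

# The example forecast from the text: 20% probability in the next 30 years.
rate = implied_annual_rate(0.20, 30.0)
print(f"implied rate: {rate:.5f} events per year")
print(f"probability over 1 year:  {poisson_probability(rate, 1.0):.4f}")
print(f"probability over 30 years: {poisson_probability(rate, 30.0):.4f}")
```

Under that assumption, the same rate yields a much smaller probability for any single year, which is one reason long-term forecasts inform planning rather than targeted action.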

Traditional forecasting approaches

Currently, most of the aftershock forecasts issued by governmental bodies worldwide rely on statistical models that analyze earthquake clustering patterns. Statistical approaches use mathematical analysis of past seismicity—the frequency and intensity of earthquake activity in a given region over a period of time—to forecast earthquakes. One statistical approach, known as point-process modeling, considers earthquakes as points in time and space and uses a conditional intensity function to characterize the probability of an event occurring at a specific time and location given the history of past events.

The epidemic-type aftershock sequence (ETAS) approach is a widely used point-process model that captures complex patterns of earthquake occurrence by quantifying the stochastic nature of earthquake triggering. 1

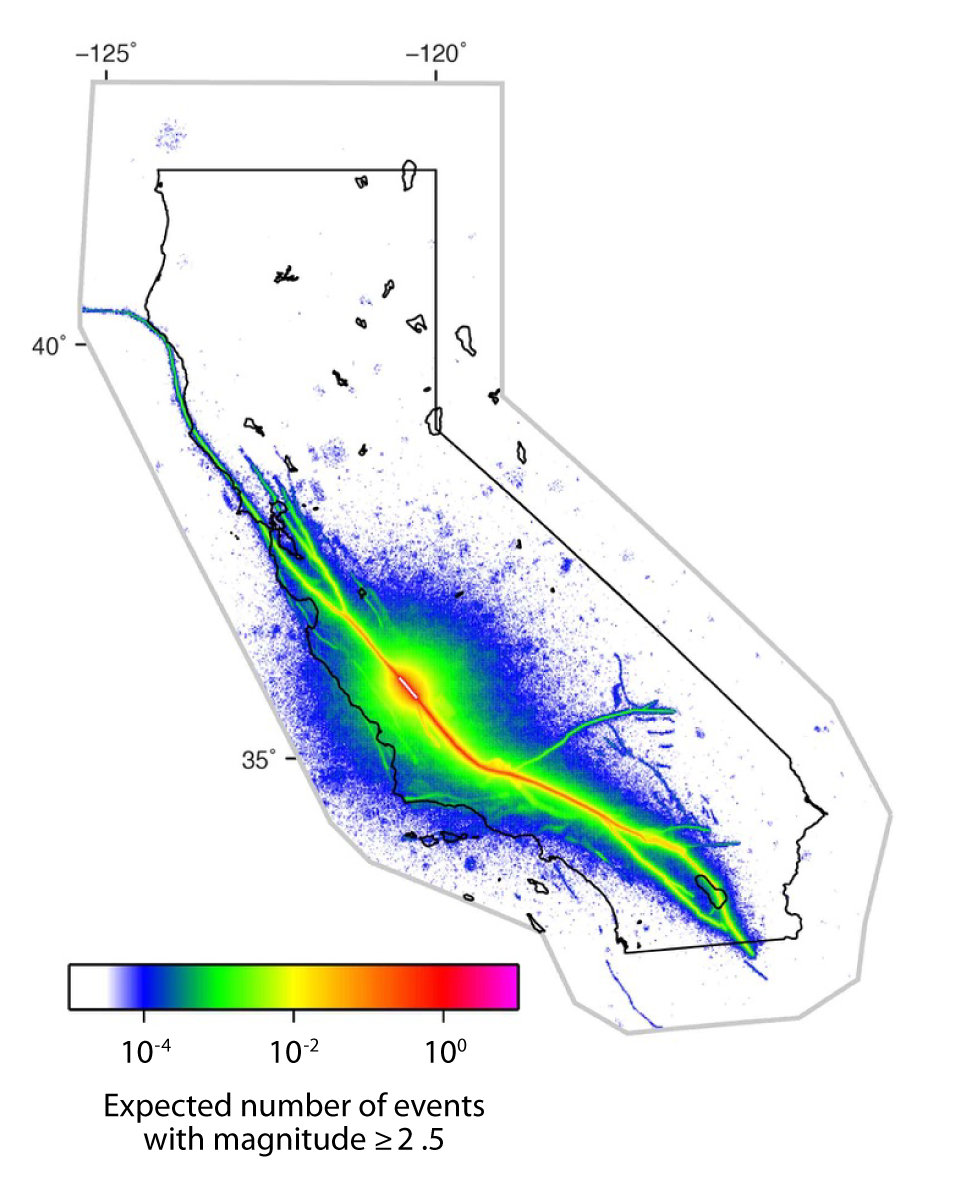

ETAS models treat earthquakes as a self-exciting process, in which one event can trigger others. They combine random background seismicity with triggered events and assume that the rate of triggering declines over time. (See figure 1.)

Figure 1.

An aftershock forecast for a 7-day period following a hypothetical magnitude 6.1 earthquake (shown as a white line surrounded by red) on the San Andreas Fault in California. The forecast reflects the probability of aftershock events triggered by the initial event. It was made with a statistical model known as an epidemic-type aftershock sequence (ETAS), which treats earthquakes as a self-exciting process. ETAS modeling is a traditional approach to earthquake forecasting. This ETAS model also incorporates known fault geometry, which makes it more robust than a purely statistical forecast. It was generated as part of the Uniform California Earthquake Rupture Forecast, Version 3. (Adapted from E. H. Field et al., Seismol. Res. Lett. 88, 1259, 2017.)
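The self-exciting idea behind ETAS can be made concrete with a conditional intensity function: a constant background rate plus a contribution from every past earthquake that grows with its magnitude and decays with elapsed time. The following is a minimal, time-only sketch that assumes the common Omori-Utsu decay kernel. All parameter values are placeholders chosen for illustration, not fitted values, and the sketch omits the spatial terms and fault geometry used in the forecast shown in figure 1.

```python
import numpy as np

def etas_rate(t, event_times, event_mags,
              mu=0.1, K=0.05, alpha=1.0, c=0.01, p=1.1, m_c=3.0):
    """Temporal ETAS conditional intensity (events per day) at time t.

    mu: background rate; K, alpha: triggering productivity;
    c, p: Omori-Utsu decay parameters; m_c: reference magnitude.
    All defaults are illustrative placeholders.
    """
    event_times = np.asarray(event_times, dtype=float)
    event_mags = np.asarray(event_mags, dtype=float)
    past = event_times < t                          # only earlier events can trigger
    productivity = K * 10.0 ** (alpha * (event_mags[past] - m_c))
    decay = (t - event_times[past] + c) ** (-p)     # triggering rate declines with time
    return mu + float(np.sum(productivity * decay))

# A hypothetical magnitude 6.1 mainshock at day 0 and a magnitude 4.0 aftershock at day 0.5
times = [0.0, 0.5]
mags = [6.1, 4.0]
for t in (0.6, 1.0, 7.0, 30.0):
    print(f"day {t:5.1f}: {etas_rate(t, times, mags):8.3f} events/day")
```

The printed rates fall back toward the background value `mu` as time passes, which is the declining triggering rate described above.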

Physics-based approaches use knowledge from continuum mechanics and friction theory to forecast earthquakes. Such models consider elastic deformation, imparted by previous earthquakes and other physical processes, that modifies the stresses on nearby faults and can trigger subsequent events. They combine estimates of elastic stresses with laws describing how the rate of seismicity varies in response to stress changes, and they assume that experimentally constrained friction laws are applicable.
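One widely used way to connect stress changes to seismicity rates is to compute a Coulomb stress change and feed it into a rate-and-state seismicity response. The sketch below assumes the standard formulation of Dieterich (1994) for a sudden stress step. The function names and every numerical value are hypothetical and serve only to show the shape of the calculation.

```python
import numpy as np

def coulomb_stress_change(d_shear, d_normal, mu_eff=0.4):
    """Coulomb stress change in MPa; d_normal is positive when the fault is unclamped."""
    return d_shear + mu_eff * d_normal

def rate_state_ratio(t, d_cfs, a_sigma=0.05, t_a=10.0):
    """Seismicity rate relative to background, t years after a stress step d_cfs (MPa).

    a_sigma: frictional parameter A times normal stress (MPa);
    t_a: aftershock relaxation time (years). Both are assumed values.
    """
    gamma = (np.exp(-d_cfs / a_sigma) - 1.0) * np.exp(-t / t_a)
    return 1.0 / (1.0 + gamma)

d_cfs = coulomb_stress_change(d_shear=0.08, d_normal=0.05)   # a stress increase of about 0.1 MPa
for t in (0.0, 1.0, 10.0, 50.0):
    print(f"t = {t:5.1f} yr: rate is {rate_state_ratio(t, d_cfs):6.2f} x background")
print(f"stress shadow at t = 0: {rate_state_ratio(0.0, -d_cfs):.3f} x background")
```

A positive stress change raises the rate immediately and lets it relax back to the background level, whereas a negative change suppresses it. The result depends strongly on `a_sigma` and `t_a`, which are exactly the kinds of parameters that are difficult to constrain in practice.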

Because earthquake processes are incompletely understood and difficult to observe, models that accurately describe all aspects of earthquake behavior are elusive. Point-process models emphasize direct triggering between earthquakes, and as such, they are particularly suitable for aftershock forecasting. (See figure 2.)

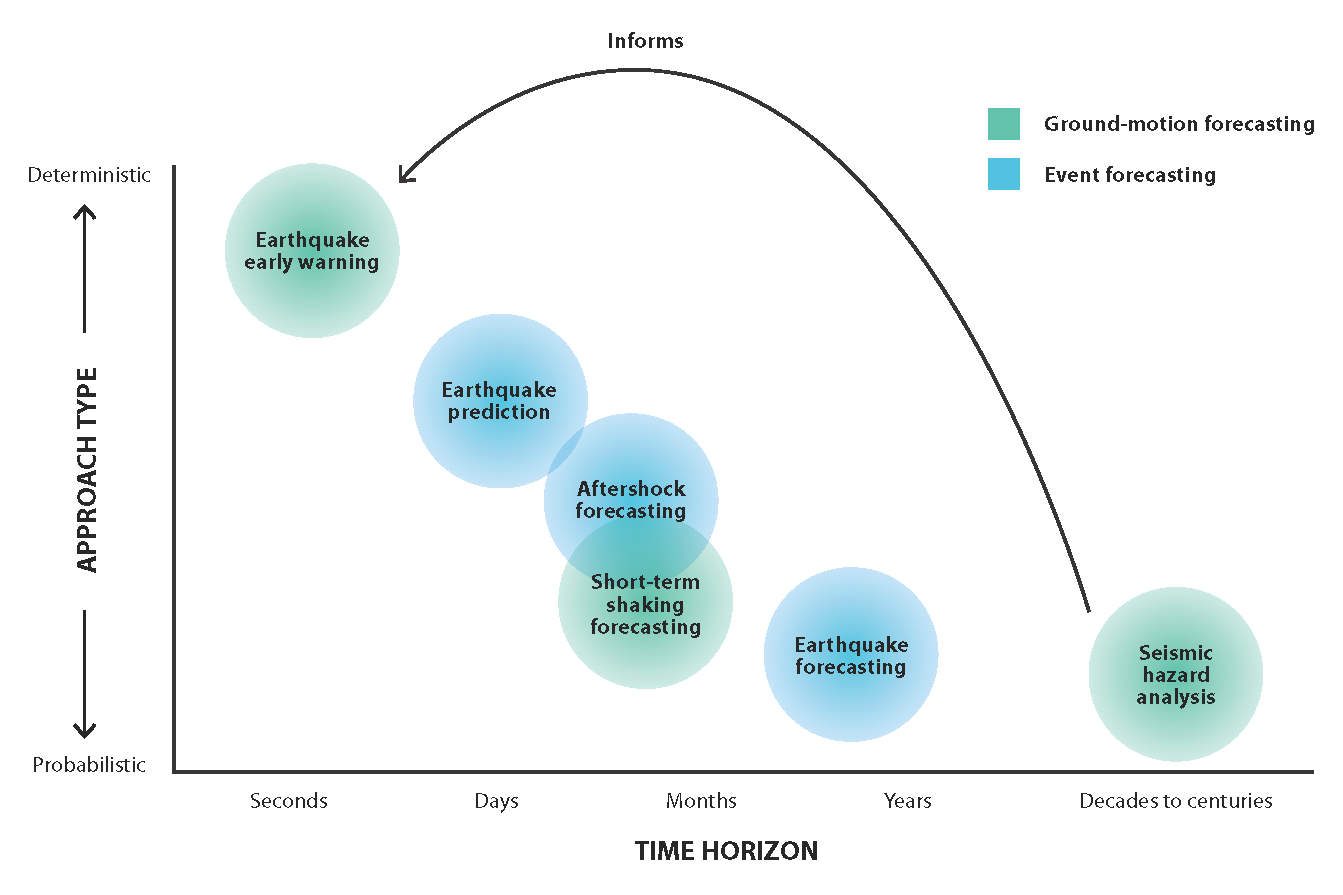

Figure 2.

Seismic hazard characterization methods span a range of time horizons. Ground-motion forecasting approaches (green) include earthquake early warning (EEW), short-term shaking forecasting, and seismic hazard analysis (SHA). Event forecasting approaches (blue) include earthquake prediction, aftershock forecasting, and earthquake forecasting. SHA, which quantifies the strength of earthquake shaking likely to occur in the future, is used to identify regions with high seismic hazard and inform the development of EEW systems. Methods that overlap on the graph can be used together in an integrated approach. Wave propagation, used in EEW, and long-term plate boundary strain rates and historical seismicity patterns, used in SHA, are well-understood physics with high predictability. In contrast, the physics behind short- to medium-term forecasting is not as well understood, which results in lower predictability for those approaches.

The fact that purely statistical models often outperform physics-based models indicates that there is unrealized progress to be made in earthquake forecasting. Physics-based models require reliable estimates of stress changes, material properties of the crust, and fault friction and a detailed knowledge of the fault system’s geometry. When such information is available, physics-based models can compete with ETAS models, particularly for estimating seismicity rates far from the mainshock in space and time. 2 That has motivated the development of hybrid models that leverage the strengths of each type of approach. Hybrid models address limitations such as the lack of underlying physics in statistical models and uncertainties in parameter estimations in physics-based models and can yield better forecasting performance. 3

Both physics-based and ETAS models can be computationally expensive. Hybrid models, like physics-based models, require high-resolution observations, which are often not available. And both statistical and physical approaches use multiple parameters that can be challenging to estimate, especially in real-time settings.

From deterministic prediction to probabilistic forecasting

The history of earthquake science has seen repeated phases of growing insights punctuated by large earthquakes that highlight gaps in the community’s understanding. Through that historical progression, earthquake science has transitioned from seeking deterministic prediction to embracing probabilistic forecasting frameworks that acknowledge the inherent complexity of seismic processes.

This timeline illustrates the evolution of earthquake prediction science across six major eras.

Prescientific ideas about earthquakes include mythologies that are found in many regions. In Japan, for example, earthquakes were attributed to a giant underground catfish named Namazu (shown in the image above). Greek philosophers put forth ideas for how wind and water might cause earthquakes.

The early observation era (ancient times to 1890s) began with the creation of systems to characterize earthquake intensity and the development of tools to measure shaking. It culminated with Fusakichi Omori’s groundbreaking law that describes aftershock decay patterns.

The foundation era (1900s–50s) was launched by systematic multidisciplinary studies of the 1906 San Francisco earthquake that advanced understanding of fault mechanics and its role in earthquake generation. At the end of that phase, the Gutenberg–Richter law (1944) established the exponential relationship between earthquake frequency and magnitude.

The era of optimism (1960s–70s) saw further improvement to scientific understanding of earthquakes with the widespread acceptance of plate tectonic theory. The dilatancy–diffusion hypothesis put forth mechanisms of expansion and fluid flow in faults as earthquake precursors and brought hope that geophysical observations could lead to predictions. The era reached its peak with the successful evacuation before the 1975 earthquake in Haicheng, China, only to be challenged by the country’s devastating unpredicted 1976 Tangshan earthquake.

The insight era (1980s–90s) saw the emergence of probabilistic forecasting—epidemic-type aftershock sequence models and public aftershock forecasts in California—after several deterministic predictions failed. Scientists tried to monitor an earthquake they predicted would occur in Parkfield, California, before 1993, but the next major quake in the region wasn’t until 2004. The Coulomb rate-and-state model better established the physics underlying earthquakes by relating crustal stress, fault friction, and earthquake nucleation.

The open data era (2000s) featured international collaboration. Better instrumentation led to the discovery of slow earthquakes and tectonic tremors in subduction zones. The seismology community was rattled, though, by the 2009 L’Aquila earthquake in central Italy and subsequent legal prosecution of scientists for forecasts that were judged to be misleading. The controversy prompted Italy to develop an improved aftershock forecasting system.

The new dawn era (2010s–present) has been characterized by advanced data integration, machine-learning applications, and expanded operational forecasting. The search for precursory signals extended to the use of satellites to measure magnetic anomalies and ionosphere disruptions before and during large earthquakes.

Deep-learning approaches

The application of AI techniques, such as artificial neural networks, to seismology dates back to the late 1980s, an era of much initial excitement around machine learning. The recent rise of deep neural networks (DNNs; see Physics Today, December 2024, page 12

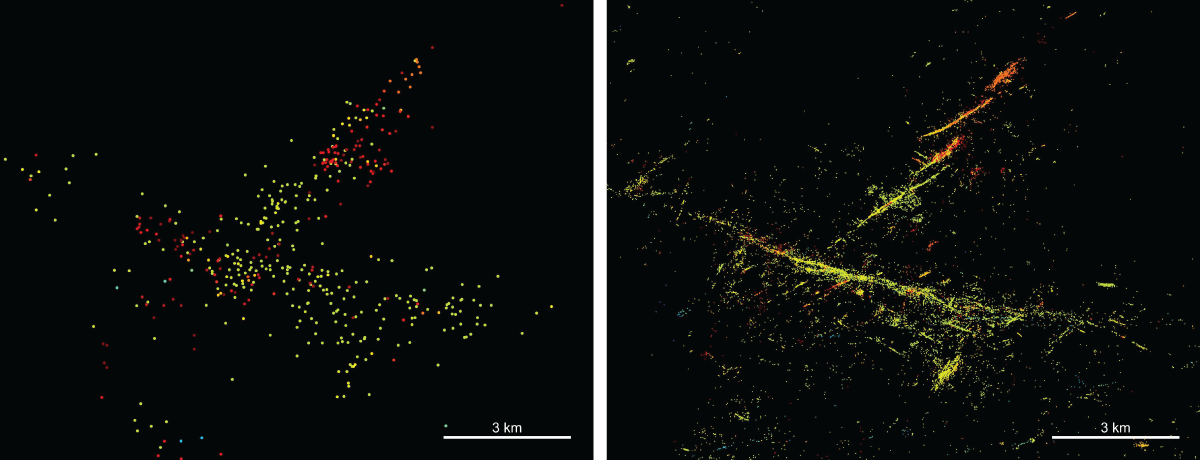

Convolutional neural networks are a class of DNNs that are primarily used for extracting features from grid-like data through the use of filters. Recurrent neural networks, on the other hand, are designed to process sequential data by maintaining an internal state that captures information from previous steps in the sequence. That allows them to model temporal dependencies and make predictions based on historical context. Deep-learning models have surpassed both classical and early machine-learning approaches in many seismological tasks, particularly in signal detection and phase picking (measuring the arrival time of seismic waves). That has led to the creation of more-comprehensive earthquake catalogs that include many previously undetected small events, as shown in figure 3.

Figure 3.

Earthquake detection from seismological data has been vastly improved by the application of deep-learning algorithms. Earthquakes from the 2016 magnitude 5.8 swarm near Pawnee, Oklahoma, are represented as individual points, color coded by time of occurrence, with yellow representing earlier quakes and red representing later ones. The US Geological Survey Advanced National Seismic System Comprehensive Earthquake Catalog (left) contains earthquakes that were directly measured by standard seismological methods. A deep-learning-based earthquake catalog

The exceptional ability of neural networks to model complex relationships opens new avenues for data-driven modeling of seismicity. Neural temporal point-process (NTPP) models use recurrent neural networks to forecast the time evolution of sequences of events. For that reason, they are a natural choice to explore as a more flexible forecasting strategy than traditional statistical point-process models. A key shift in the modeling approach is the move from relying on sparse seismicity indicators (used in early machine-learning forecasting models) to using the information of all individual earthquakes in earthquake catalogs. 6
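To give a sense of what an NTPP computes, the sketch below passes a short catalog through a tiny recurrent network and evaluates the log-likelihood of the observed inter-event times. It assumes a simple design in which the log-intensity varies linearly with time since the last event, one of several possible choices and not the architecture of any model cited here. The weights are random and untrained, and all names and values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
HIDDEN = 8
# Randomly initialized, untrained weights, for illustration only.
W_in = rng.normal(scale=0.3, size=(HIDDEN, 2))
W_h = rng.normal(scale=0.3, size=(HIDDEN, HIDDEN))
v = rng.normal(scale=0.3, size=HIDDEN)
b, w = -1.0, -0.5   # base log-rate and time-decay coefficient (assumed values)

def sequence_log_likelihood(times, mags, m_ref=3.0):
    """Log-likelihood of the inter-event times under the toy recurrent model."""
    h = np.zeros(HIDDEN)
    log_lik = 0.0
    prev_dt = 0.0
    for j in range(len(times) - 1):
        # Update the internal state with event j (its waiting time and magnitude).
        x = np.array([prev_dt, mags[j] - m_ref])
        h = np.tanh(W_in @ x + W_h @ h)
        a = float(v @ h) + b                  # log-intensity just after event j
        dt = times[j + 1] - times[j]
        # Intensity after event j is exp(a + w * s) at elapsed time s;
        # its integral over the gap has a closed form.
        integral = (np.exp(a + w * dt) - np.exp(a)) / w
        log_lik += (a + w * dt) - integral    # log-intensity at next event minus integral
        prev_dt = dt
    return float(log_lik)

times = [0.0, 0.2, 0.5, 3.0, 3.1]   # event times in days (made-up catalog)
mags = [5.8, 3.4, 3.9, 3.1, 4.2]
print(f"log-likelihood: {sequence_log_likelihood(times, mags):.3f}")
```

Training such a model would mean adjusting the weights to maximize this log-likelihood over many sequences, which is why large catalogs are needed.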

Applications of NTPPs for earthquake rate forecasting 7 have thus far shown marginal improvement over ETAS models: NTPPs are more efficient and flexible, and the multimodality of neural networks allows for incorporation of more information. 8 NTPP models require large training sets, however, which limits their applicability. The structured yet sparse nature of earthquake catalogs is an obstacle to training deep-learning models effectively. Incorporating spatiotemporal information from earthquake catalogs into the model-building process is also difficult. A recent trend in AI-based earthquake forecasting leverages the statistical power of ETAS models while incorporating the spatiotemporal sequence-forecasting capabilities of neural networks. 9,10 That allows the model to combine historical seismicity patterns and the established statistical principles of ETAS.

What AI can—and can’t—do

Machine learning offers powerful tools for analyzing complex data. But a combination of the inherent nature of earthquakes, limited knowledge of Earth’s interior conditions, and the constraints of current AI models poses challenges for using AI for earthquake forecasting.

Data requirements. One of the biggest difficulties is the nature of the data themselves. Deep-learning models, which gain the ability to predict by recognizing patterns, need massive amounts of data to train effectively. That poses a fundamental problem in earthquake forecasting, since major earthquakes are, thankfully, rare and may occur only once a century in a given location. The lack of historical data makes it difficult to train deep-learning models to predict major events. Even for smaller earthquakes, the data are often incomplete, especially in areas with limited seismic monitoring that detects only larger earthquakes. Though deep-learning-based earthquake monitoring has improved detection, only a few decades of high-quality digital data, even in well-monitored regions, are available. The lack of complete earthquake catalogs limits the ability to build effective forecasting models.

To overcome the problem of limited training data, researchers use such techniques as generating synthetic data from known physics and computer simulations. It’s crucial, however, that artificial data mirror the complexity of real earthquakes. Another strategy is to leverage transfer learning, in which a model trained on a large dataset from one geographic area is then fine-tuned using a smaller dataset from a region of interest. That approach could help improve models in areas with limited data.
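As a small illustration of synthetic data, the sketch below draws magnitudes from the Gutenberg-Richter relation and then checks that a standard estimator recovers the assumed b-value. It is only one ingredient of a synthetic catalog, with no times, locations, or triggering. The b-value, minimum magnitude, and sample size are arbitrary choices made for the example.

```python
import numpy as np

def sample_magnitudes(n, b_value=1.0, m_min=2.0, seed=0):
    """Draw n magnitudes above m_min from a Gutenberg-Richter distribution."""
    rng = np.random.default_rng(seed)
    beta = b_value * np.log(10.0)          # exponential rate equivalent to the b-value
    return m_min + rng.exponential(scale=1.0 / beta, size=n)

def estimate_b_value(mags, m_min):
    """Maximum-likelihood b-value estimate (Aki's formula) for continuous magnitudes."""
    return np.log10(np.e) / (np.mean(mags) - m_min)

mags = sample_magnitudes(50_000, b_value=1.0, m_min=2.0)
b_hat = estimate_b_value(mags, m_min=2.0)
print(f"recovered b-value: {b_hat:.3f}")
print(f"events with magnitude >= 5: {int(np.sum(mags >= 5.0))} of {mags.size}")
```

Even in a catalog of 50,000 synthetic events, only a few dozen reach magnitude 5, which shows how quickly large events become scarce in training data.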

Generalization of models to new regions. Another hurdle to the development of effective earthquake-forecasting models using AI is the diverse nature of earthquakes across regions. The frequency, magnitude, and patterns of seismic events vary significantly in different tectonic regimes, which makes developing universal models extremely difficult. A promising technique involves domain adaptation, in which a model trained in one region is translated to another region. But the best approach may be to develop models trained on data from multiple regions to enhance their ability to learn more general patterns and reduce the risk of overfitting to region-specific characteristics. It could be achieved by incorporating more physics-based features rather than relying solely on data-driven approaches that are region specific.

Model interpretability and transparency. A key challenge to using AI in earthquake forecasting is the black box problem: Deep-learning models can be incredibly complex and opaque, which makes it difficult to understand how they reach their predictions. That lack of interpretability is not only an obstacle for scientists trying to understand the underlying physical mechanisms of earthquakes, but it also hinders the public trust that is crucial for operational earthquake forecasting. Furthermore, without transparency, it becomes difficult to diagnose errors, identify model limitations, and understand the reasons behind incorrect predictions or biases.

Methods of explainable AI, commonly known as XAI, are being developed to shed light on the decision-making processes of AI models. Techniques such as feature-importance analysis can reveal which factors are most influential in a prediction and potentially aid in the identification of the primary physical mechanisms driving a seismic sequence. Additionally, incorporating existing domain knowledge, such as established physical laws, into AI models can enhance their interpretability and ensure that their results are plausible. Hybrid AI models, which combine deep learning with traditional forecasting approaches, can also offer a path toward greater explainability.
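One simple form of feature-importance analysis is permutation importance: shuffle one input at a time and measure how much the model's error grows. The sketch below applies it to a stand-in model and made-up data. The three features are anonymous placeholders rather than real seismic quantities, and the function names are ours.

```python
import numpy as np

def permutation_importance(predict, X, y, seed=0):
    """Increase in mean squared error when each feature column is shuffled in turn."""
    rng = np.random.default_rng(seed)
    base_error = np.mean((predict(X) - y) ** 2)
    importances = []
    for col in range(X.shape[1]):
        X_perm = X.copy()
        X_perm[:, col] = rng.permutation(X_perm[:, col])   # break the link to the target
        importances.append(np.mean((predict(X_perm) - y) ** 2) - base_error)
    return np.array(importances)

def toy_model(X):
    """Stand-in for a trained model: uses feature 0 strongly, feature 1 weakly, feature 2 not at all."""
    return 3.0 * X[:, 0] + 0.5 * X[:, 1]

rng = np.random.default_rng(1)
X = rng.normal(size=(500, 3))
y = toy_model(X)
scores = permutation_importance(toy_model, X, y)
for i, s in enumerate(scores):
    print(f"feature {i}: importance {s:.3f}")
```

The ranking identifies which inputs the model relies on, but it does not by itself establish a physical mechanism. That step still requires domain knowledge.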

Pitfall benchmarking. Rigorous testing and benchmarking are essential for establishing the reliability and skill of any earthquake-forecasting model. That validation involves both retrospective testing, which evaluates a model’s performance on past data, and prospective (and pseudoprospective) testing, which measures its accuracy in predicting future seismic activity. Global community efforts, including the Collaboratory for the Study of Earthquake Predictability (CSEP) 11,12 and the Regional Earthquake Likelihood Models community forecasting experiment, 13 are working to facilitate those evaluations. And the Python library pyCSEP 14 allows researchers to efficiently apply standardized testing methods in their own research.
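The core idea behind many such comparisons is to score each forecast by the likelihood it assigns to the earthquakes that actually occurred. The sketch below is written from scratch in NumPy and does not use or reproduce the pyCSEP interface. It assumes gridded forecasts with independent Poisson-distributed counts in each bin, and the forecasts and observations are invented for the example.

```python
import numpy as np
from math import lgamma

def poisson_log_likelihood(forecast_rates, observed_counts):
    """Joint log-likelihood of observed counts, given the expected count in each bin."""
    rates = np.asarray(forecast_rates, dtype=float)     # must be strictly positive
    counts = np.asarray(observed_counts, dtype=float)
    log_factorials = np.array([lgamma(n + 1.0) for n in counts])
    return float(np.sum(-rates + counts * np.log(rates) - log_factorials))

def information_gain_per_event(rates_a, rates_b, observed_counts):
    """Average log-likelihood advantage of forecast A over forecast B per observed earthquake."""
    n_events = np.sum(observed_counts)
    return (poisson_log_likelihood(rates_a, observed_counts)
            - poisson_log_likelihood(rates_b, observed_counts)) / n_events

observed = [0, 2, 1, 0]                 # earthquakes counted in four space-magnitude bins
forecast_a = [0.1, 1.5, 1.0, 0.2]       # candidate model
forecast_b = [0.7, 0.7, 0.7, 0.7]       # uniform baseline with the same total rate
gain = information_gain_per_event(forecast_a, forecast_b, observed)
print(f"information gain per earthquake (A over B): {gain:.3f}")
```

A positive gain favors forecast A on this dataset, but a fair comparison also needs a well-tuned baseline and enough independent observations for the difference to be statistically meaningful.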

Despite the well-established standardized testing of operational earthquake forecasts, such testing has not been applied to most AI-based forecasts; that lack of testing raises concerns about the validity of their findings and scientific rigor. 15 The use of generic ETAS parameters that may not be transferable across different tectonic regimes is another commonly observed issue. 16 To ensure reliable evaluation, models need to be testable, contain clearly defined parameters, and be evaluated against well-tuned and state-of-the-art baselines. That requires prospective testing over extended periods and across multiple regions.

The earthquake-forecasting community recognizes the need for standardized tests but has yet to reach a consensus on the minimum requirements. There are several contributing factors, including the limitations of current evaluation methods and the recognition that models may still provide valuable information even if they fail specific tests. 12 Community-driven efforts to share source codes and prospective forecasts, along with platforms like CSEP, are crucial steps toward establishing robust, standardized earthquake-forecasting benchmarking. 17 CSEP provides a valuable platform to evaluate model performance in retrospective and prospective modes, collect data, and compare results across various models.

Ethical considerations. Communication of earthquake forecasts, especially probabilistic ones, in a way that the public can understand and appropriately act on presents a significant ethical challenge. Unlike weather forecasts, which people may expect to provide precise predictions of time and location, earthquake forecasts are inherently uncertain. That can lead to confusion, anxiety, and potentially dangerous responses from the public.

It will require careful consideration of several factors to address the ethical implications of AI-based earthquake forecasting. Privacy concerns must be balanced with the need for data to develop accurate models, and potential biases in the data or forecasting algorithms must be identified and addressed to ensure equitable outcomes for all communities. Clear communication using plain language is essential to avoid misunderstandings and to ensure that the public can interpret forecasts accurately. Managing public expectations is crucial; it requires emphasis on the probabilistic nature of earthquake forecasting and its inherent uncertainties.

Forecasts should also include clear, actionable guidance on how to prepare for and mitigate earthquake risks. Maintaining public trust requires transparency about the limitations of AI models and the uncertainties associated with any forecast. Finally, effective communication must be sensitive to cultural differences and variations in risk perception to ensure that forecasts are accessible and relevant to diverse populations.

A data-driven future

Probabilistic earthquake forecasting, in contrast to deterministic earthquake prediction, is a rapidly evolving field. Advances in technology and data analysis, particularly the incorporation of AI techniques, are driving the development of more-sophisticated forecasting models. Advances in sensor technology and the expansion of dense seismic networks are providing new insight into the dynamics of Earth’s crust. That wealth of data enables the creation of more detailed and nuanced forecasting models that better capture the complexities of earthquake processes. A data-centric approach to AI-based earthquake forecasting allows for the incorporation of potentially unknown earthquake physics into the modeling process.

The multimodality of deep-learning methods can enable simultaneous processing of diverse sensor data, such as seismic, electromagnetic, and geodetic information. The flexibility and data-fusion capabilities of AI models allow for the implementation and testing of different hypotheses and may facilitate a more comprehensive understanding of earthquake processes. As forecasting methods continue to evolve, they hold the potential to improve earthquake preparedness, response, and resilience, all of which will remain vital for the mitigation of earthquake risk.

This article was originally published online on 16 July 2025.

References

1. J. L. Hardebeck et al., Annu. Rev. Earth Planet. Sci. 52, 61 (2024), https://doi.org/10.1146/annurev-earth-040522-102129

2. S. Mancini et al., J. Geophys. Res. Solid Earth 124, 8626 (2019), https://doi.org/10.1029/2019JB017874

3. C. Cattania et al., Seismol. Res. Lett. 89, 1238 (2018), https://doi.org/10.1785/0220180033

4. S. M. Mousavi, G. C. Beroza, Science 377, eabm4470 (2022), https://doi.org/10.1126/science.abm4470

5. S. Mancini et al., J. Geophys. Res. Solid Earth 127, e2022JB025202 (2022), https://doi.org/10.1029/2022JB025202

6. A. Panakkat, H. Adeli, Int. J. Neural Syst. 17, 13 (2007), https://doi.org/10.1142/S0129065707000890; G. C. Beroza, M. Segou, S. M. Mousavi, Nat. Commun. 12, 4761 (2021), https://doi.org/10.1038/s41467-021-24952-6

7. K. Dascher-Cousineau et al., Geophys. Res. Lett. 50, e2023GL103909 (2023), https://doi.org/10.1029/2023GL103909; S. Stockman, D. J. Lawson, M. J. Werner, Earth’s Future 11, e2023EF003777 (2023), https://doi.org/10.1029/2023EF003777

8. O. Zlydenko et al., Sci. Rep. 13, 12350 (2023), https://doi.org/10.1038/s41598-023-38033-9

9. C. Zhan et al., IEEE Trans. Geosci. Remote Sens. 62, 5920114 (2024), https://doi.org/10.1109/TGRS.2024.3424881

10. H. Zhang et al., Geophys. J. Int. 239, 1545 (2024), https://doi.org/10.1093/gji/ggae349

11. D. Schorlemmer et al., Seismol. Res. Lett. 89, 1305 (2018), https://doi.org/10.1785/0220180053

12. L. Mizrahi et al., Rev. Geophys. 62, e2023RG000823 (2024), https://doi.org/10.1029/2023RG000823

13. J. D. Zechar et al., Bull. Seismol. Soc. Am. 103, 787 (2013), https://doi.org/10.1785/0120120186

14. W. H. Savran et al., J. Open Source Softw. 7, 3658 (2022), https://doi.org/10.21105/joss.03658

15. K. Bradley, J. A. Hubbard, “More machine learning earthquake predictions make it into print,” Earthquake Insights, 4 December 2024, https://doi.org/10.62481/bd134329

16. L. Mizrahi et al., Bull. Seismol. Soc. Am. 114, 2591 (2024), https://doi.org/10.1785/0120240007

17. A. J. Michael, M. J. Werner, Seismol. Res. Lett. 89, 1226 (2018), https://doi.org/10.1785/0220180161

18. Y. Park, G. C. Beroza, W. L. Ellsworth, Seism. Rec. 2, 197 (2022), https://doi.org/10.1785/0320220020

More about the authors

S. Mostafa Mousavi is an assistant professor of geophysics at Harvard University in Cambridge, Massachusetts. Camilla Cattania is an assistant professor of geophysics at MIT, also in Cambridge. Gregory C. Beroza is the codirector of the Statewide California Earthquake Center, headquartered in Los Angeles, and is the Wayne Loel Professor of Earth Science at Stanford University.